When it comes to building content that helps you achieve your SEO objectives, “good” isn’t good enough. Your content has to be tailor-made to achieve your goals. It has to target the right keywords for the right audiences, supply the best information to satisfy the intent behind the queries, and help guide your audience to where they need to go next.

This doesn’t happen by chance. Thoughtful preparation and direction will help ensure that writers are able to create content that achieves its goals. Developing an SEO content brief is an important step: it gives writers clear, data-informed inputs that guide the drafting process.

SEO content briefs: 8 key elements

Whether you’re planning and drafting content internally, or working with a partner for either or both, creating detailed briefs is a great way to transfer knowledge and collaborate. The content brief should ideally represent the expert insight of SEO professionals, setting the published page up for maximum impact in search.

These eight inputs are essential to a comprehensive yet concise SEO content brief.

Primary keywords

Generally speaking, every piece of SEO-driven content should target one or maybe two primary keywords, identified as part of your keyword strategy. These are the queries whose search engine results pages (SERPs) you want your brand to show up on. At TopRank Marketing, our briefs include keyword data derived from SEO analysis tools to help writers understand the context, SERP landscape, and intent behind these primary keywords.

Semantic keywords

Each primary keyword you try to rank for has several semantic keywords connected to it. These are words semantically related to the primary keyword (think “bread,” “bacon,” “lettuce,” and “tomatoes” for the primary keyword “blt sandwich”) that appear so frequently alongside the subject matter in other content online that search engines have learned to expect them on pages about the primary keyword. Including these semantic keywords in your content helps Google understand what the page covers, which tends to support higher rankings.

Conduct research to identify the most common semantic keywords associated with each primary keyword, then provide them as a list for the writer. Wherever natural, it’s valuable to include these phrases in copy and headers.
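Once the writer has that list, a quick coverage check on the draft can show which terms have not been worked in yet. The sketch below is illustrative only: the function name `missing_semantic_keywords` and the sample draft are hypothetical, and it does plain case-insensitive phrase matching, which is not how a search engine evaluates content.

```python
import re

def missing_semantic_keywords(draft: str, keywords: list[str]) -> list[str]:
    """Return the keywords from the brief that never appear in the draft."""
    # Normalize case and whitespace so "BLT  Sandwich" matches "blt sandwich".
    normalized = re.sub(r"\s+", " ", draft.lower())
    missing = []
    for keyword in keywords:
        # Word boundaries keep "bread" from matching inside "breadth".
        pattern = r"\b" + re.escape(keyword.lower()) + r"\b"
        if not re.search(pattern, normalized):
            missing.append(keyword)
    return missing

# Hypothetical draft sentence and keyword list, for illustration only.
draft = "A classic BLT sandwich layers crisp bacon and lettuce on toasted bread."
print(missing_semantic_keywords(draft, ["bread", "bacon", "lettuce", "tomatoes"]))
```

Treat the result as a prompt for the writer, not a checklist to force: a missing term only belongs in the copy if it fits naturally.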

Audience insights

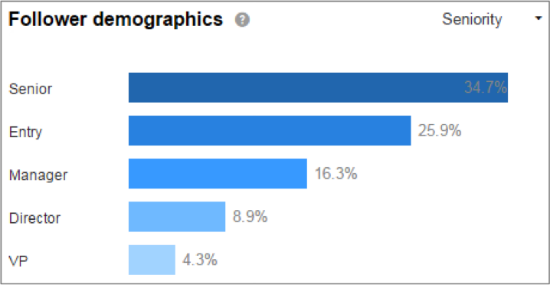

When it comes to content planning, everything begins and ends with the audience – including your approach to SEO. This section of the brief should provide information about who the content is intended to reach. Resonating directly with your intended audience is as important as any technical factor when it comes to ranking in (and getting results from) search.

Audience insights shared in your brief might include:

- Demographic info

- Buyer persona

- Job seniority

- Role or function

- Pain points

Search intent

Tools like Semrush generally sort the search intent of a keyword into one of four categories: informational, transactional, navigational, or commercial. While this provides a good start to understanding intent, it hardly tells the whole story.

Take the next step by elaborating on the common motivations for running the search query. Reviewing and analyzing the SERP can be very useful in this regard, because Google is telling you the results it (and thus searchers) find valuable. Speaking of which…

SERP features

While the most common feature of SERPs is a list of site links that pertain to the key phrase the searcher typed in, those links are not the only feature of most SERPs. Other SERP features include sponsored content, reviews, video carousels, “people also ask” sections, featured snippets, local pack listings (for businesses relevant to the query), and more. These features appear in SERPs based on how useful the search engine determines they would be to the user.

Like semantic keywords, planning for SERP features can help inform how content is structured. For example, if you want to win a featured snippet, you’ll want to include a single paragraph beneath a clearly labeled header that comprehensively answers the question posed by the query in no more than 52 words.
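A length guideline like this is easy to check before a draft goes to review. The following is a minimal sketch, assuming whitespace-separated tokens are a close enough stand-in for a word count; the function name `fits_snippet_limit` and the sample paragraph are hypothetical.

```python
def fits_snippet_limit(paragraph: str, max_words: int = 52) -> bool:
    """Check whether a candidate answer paragraph stays within the word limit."""
    # str.split() with no arguments splits on any run of whitespace.
    return len(paragraph.split()) <= max_words

# Hypothetical answer paragraph placed beneath a question-style header.
answer = (
    "An SEO content brief is a document that gives writers the keywords, "
    "audience, intent, and outline for a page."
)
print(len(answer.split()), fits_snippet_limit(answer))
```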

Content objectives

Researching the attributes above should give you a good idea of what you’ll need your content to do and why. Summarize your findings here in the form of clearly articulated, measurable content objectives. (For example, “this blog post is targeting an upper-funnel keyword and is intended to grow brand awareness via newsletter sign-ups.”)

Content outline

In this section, provide an outline of the structure of your content. Include your title, meta description, recommended word count, headings and subheadings, and details about what should be covered in each section.

Linking recommendations

Links are vital to helping search engines understand how your content is organized and what it’s about. A smart link building strategy will account for internal linking (links to and from the new page on your own website) as well as outbound links and backlinks.
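Taken together, the eight elements can live in a reusable template so that no section gets skipped. The sketch below is one possible shape, not a TopRank Marketing format: the `ContentBrief` class, its field names, and the `missing_sections` helper are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class ContentBrief:
    """Hypothetical template mirroring the eight brief elements."""
    primary_keywords: list[str]
    semantic_keywords: list[str] = field(default_factory=list)
    audience_insights: dict[str, str] = field(default_factory=dict)
    search_intent: str = ""
    serp_features: list[str] = field(default_factory=list)
    content_objectives: list[str] = field(default_factory=list)
    content_outline: list[str] = field(default_factory=list)
    linking_recommendations: list[str] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """List the elements that are still empty, in field order."""
        return [name for name, value in vars(self).items() if not value]

# A partially completed brief, for illustration only.
brief = ContentBrief(
    primary_keywords=["blt sandwich"],
    semantic_keywords=["bread", "bacon", "lettuce", "tomatoes"],
    search_intent="informational",
)
print(brief.missing_sections())
```

Running `missing_sections()` before handing the brief to a writer gives a quick view of which inputs still need research.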

Be strategic in your approach to SEO

The more legwork you put into your SEO content brief, the easier the content will be to draft and publish, and the more effectively the completed work will serve your overall SEO strategy. Take the time to build out these briefs for every piece of content you create and you’ll start to see the benefits in no time.

When you’re ready to take your SEO content to the next level, check out our blog post: SEO Content Strategy: From Basic to Advanced.

The post Make Sure to Include These 8 Elements in an SEO Content Brief appeared first on TopRank® Marketing.

Source: toprankblog.com