B2B video marketing has emerged as a powerful strategy for engaging business decision makers and driving growth. By incorporating video into your B2B marketing strategy, you can effectively communicate your brand message, nurture leads, and establish your company as an industry expert.

Let’s explore how video marketing helps B2B marketers and how to develop your own B2B video marketing strategy.

What is B2B video marketing?

B2B video marketing is the use of promotional video content to capture the attention of business decision makers. This type of content is most often found on social media platforms, but it can be used in a wide variety of contexts, from streaming services to digital out-of-home (DOOH) platforms. LinkedIn recently launched a dedicated connected TV (CTV) advertising product, a clear signal of how much B2B video marketing is growing.

Unlike B2C video marketing, B2B video marketing is not confined to short, flashy advertisements. While TV-style ads do have a role in B2B marketing, the unique nature of the business buying process means that there is room for a much wider variety of video content.

Some examples of B2B video marketing content include:

- Thought leadership

- Product demos

- Tutorials

- Live-streams

- Webinars

- Short-form social videos

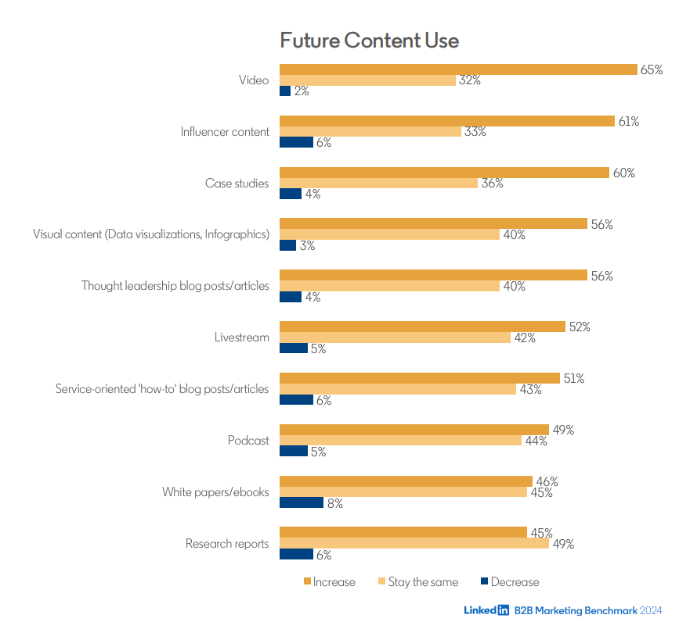

LinkedIn’s 2024 B2B Marketing Benchmark report found that video is both the content type B2B organizations use most today and the one they most plan to use going forward.

How does B2B video marketing add value for your brand?

Video marketing is generally more time- and resource-intensive than other approaches, so it’s fair to ask what value video actually adds. The answer: quite a lot. Respondents in the Content Marketing Institute’s (CMI) B2B content marketing trends research rated video, tied with case studies, as the most effective content format. Here are some benefits that make B2B video marketing indispensable.

Enhanced brand awareness and recognition

Compelling video content is among the most effective ways to capture attention and leave a lasting impression on potential customers. By showcasing your company’s expertise, thought leadership, and customer success stories, B2B video marketing can elevate your brand’s visibility and make it more recognizable in the competitive B2B landscape.

Strengthened brand reputation and credibility

High-quality video production and engaging storytelling can foster trust and credibility among business decision makers. By demonstrating your company’s understanding of industry challenges and its ability to provide solutions in a polished video format, you can establish your brand as a reliable and trustworthy partner.

Improved lead generation and nurturing

Engaging video content can effectively attract potential customers and nurture them through the sales funnel. According to research from LinkedIn, video content is shared 20x more on its platform than any other content type. Combined with how memorable video is and the lead capture tools integrated into many platforms, B2B video marketing can generate qualified leads and move them closer to a purchasing decision.

Enhanced customer education and support

Video can be a powerful tool for educating customers about your products or services, providing clear instructions, and resolving common issues. By creating comprehensive video tutorials and FAQs, B2B video marketing can improve customer satisfaction, reduce support costs, and open the door to upselling your best-qualified audience.

Effective thought leadership and positioning

Thought leadership is one of the best ways to establish your brand as an authority in your industry. B2B video marketing provides an excellent platform to showcase your brand’s thought leaders in a format that instantly humanizes them. By sharing their insights through video, you can more efficiently position your brand as a trusted source of information and attract potential customers who value your expertise.

91% of businesses used video as a marketing tool in 2023. (Wyzowl)

Why create a B2B video marketing strategy?

As with any marketing approach, a defined strategy is what makes your success measurable. But beyond that, there are a few compelling reasons why a video strategy in particular is worth your time.

Go into deeper detail

Video allows you to go into deeper detail about your products or services than you can with text or images alone. This is especially important for complex B2B solutions that require more explanation. With video, you can demonstrate how your product works, show its benefits, and address any potential concerns that your audience may have — all within a media format that holds your audience’s attention.

Offer content for a wider range of learning styles

Text is one of the easiest ways to convey your message, but it is far from the only way people consume information. Consider the online acronym TL;DR (“too long; didn’t read”): many people simply skip long blocks of text. Many people are visual learners who prefer to learn by watching videos. Others are auditory learners who prefer to learn by listening to audio or podcasts. Still others are kinesthetic learners who prefer to learn by doing, for example by following along with a demo or tutorial. Video can accommodate all of these learning styles, making it an effective tool for reaching a wider audience.

Richer analytics

Analytics for tactics like content marketing, PPC, and email tend to be rather binary: either the user took an action or they didn’t. Video provides all the same binary metrics, but it also reports view-through rates.

This metric in particular tells you whether viewers are actually watching your content and at what point they drop off. These insights are invaluable for fine-tuning your video marketing content, which will ultimately help it perform even better.

96% of video marketers say video marketing has increased user understanding of their product or service. (Wyzowl)

6 key steps in building an effective B2B video marketing strategy

Define your objectives

Before you start creating videos, you need to clearly outline your goals for your B2B video marketing efforts. Whether it’s increasing brand awareness, generating leads, or educating your audience, having clear goals will help guide your strategy and determine how to measure your success.

Conduct audience research

To make video content that is relevant and engaging to your target audience, you need to understand who they are, what their pain points are, and how they make buying decisions. These insights will help you tailor your videos to address their specific interests and needs.

Develop a content calendar

Consistency is key to building any aspect of brand identity, and video marketing content is no different. Create a content calendar to plan your video production schedule and ensure a consistent flow of content. Map out the types of videos you’ll create, their target audience, and the planned release dates. This will help you stay organized and ensure you’re producing videos that align with your overall marketing goals.

Choose the right platforms

Whether it’s LinkedIn, YouTube, or an industry-specific platform, identify where your target audience is most active. Focusing your video strategy on the platforms your audience frequents makes them more likely to see and engage with your videos.

The top three video distribution channels for marketers are, in order, social media, websites, and YouTube. (Vidyard)

Optimize for search and sharing

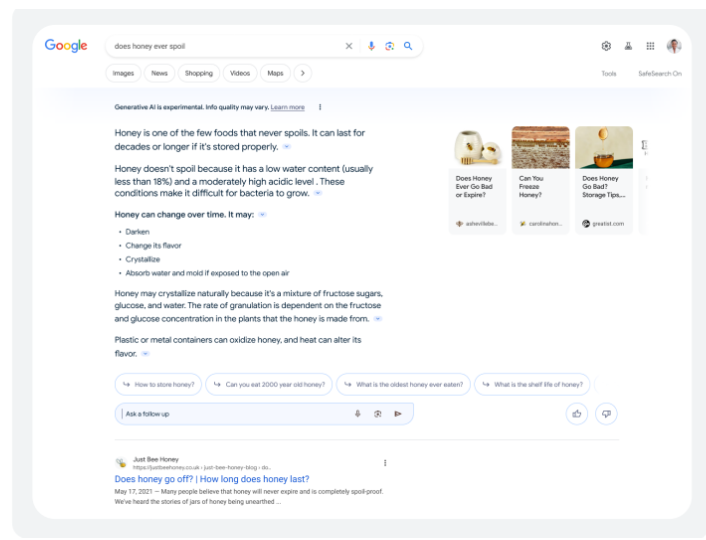

Video content increasingly appears among the top results on Google, so optimizing your video titles, descriptions, and tags with relevant keywords can improve both the videos’ search visibility and your website’s ranking. Encourage sharing by adding calls to action within your videos and social sharing buttons on your website. Learn more on our blog about optimizing YouTube content for SEO.

Establish and track KPIs

Determine which metrics will define success for your video marketing campaign, then use analytics tools to track them regularly and assess the effectiveness of your strategy. Metrics typically tracked for B2B video include total views, view-through rate, engagement, and conversion rate.
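To make these KPIs concrete, here is a minimal Python sketch that computes view-through rate, conversion rate, and the playback segment where the most viewers drop off. It assumes one common definition of view-through rate (completed views divided by impressions) and of conversion rate (conversions divided by views). Platforms define these metrics differently, so check your analytics tool before relying on either formula. The `VideoStats` fields, the quartile checkpoints, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class VideoStats:
    """Hypothetical per-video numbers exported from an analytics tool."""
    impressions: int  # times the video was shown
    views: int  # times playback started
    conversions: int  # e.g. form fills attributed to the video
    # Viewers still watching at the 25%, 50%, 75%, and 100% marks
    quartile_viewers: List[int]


def view_through_rate(s: VideoStats) -> float:
    """Assumed definition: completed views / impressions."""
    completed = s.quartile_viewers[-1]  # viewers who reached 100%
    return completed / s.impressions if s.impressions else 0.0


def conversion_rate(s: VideoStats) -> float:
    """Assumed definition: conversions / views."""
    return s.conversions / s.views if s.views else 0.0


def biggest_drop_off(s: VideoStats) -> Tuple[str, int]:
    """Return the playback segment that loses the most viewers."""
    checkpoints = [s.views] + s.quartile_viewers  # start, 25%, 50%, 75%, 100%
    labels = ["0-25%", "25-50%", "50-75%", "75-100%"]
    drops = [checkpoints[i] - checkpoints[i + 1] for i in range(len(labels))]
    worst = max(range(len(drops)), key=lambda i: drops[i])
    return labels[worst], drops[worst]


if __name__ == "__main__":
    stats = VideoStats(impressions=10_000, views=2_000, conversions=40,
                       quartile_viewers=[1_500, 900, 700, 600])
    print(f"View-through rate: {view_through_rate(stats):.1%}")  # 6.0%
    print(f"Conversion rate: {conversion_rate(stats):.1%}")  # 2.0%
    print("Biggest drop-off:", biggest_drop_off(stats))  # ('25-50%', 600)
```

In this made-up example, the largest loss happens between the 25% and 50% marks, which would suggest reviewing that part of the video first.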

Lay the groundwork for sustainable success with video marketing as part of an integrated marketing approach: learn more about Strategy and Planning services from TopRank.

The post How to Build a B2B Video Marketing Strategy appeared first on TopRank® Marketing.

Source: toprankblog.com